The algorithm for probabilistically embedding metric spaces into trees has numerous theoretical applications. It is a key tool in the design of many approximation algorithms and online algorithms. Today we will illustrate the usefulness of these trees in designing an algorithm for the online Steiner tree problem.

1. Online Steiner Tree

Let be a graph and let

be lengths on the edges. Let

be the shortest path metric on

.

For any , a Steiner tree is a subtree of

that spans (i.e., contains) all vertices in

, but does not necessarily span all of

. The vertices in

are called “terminals”. Equivalently, we can define a Steiner tree to be an acyclic, connected subgraph of

that spans all of

. Computing a minimum-length Steiner tree is NP-hard.

Today we consider the problem of constructing a Steiner tree in an online setting. There is a sequence of time steps. In each time step

, we are given a vertex

. Our algorithm must then choose a connected subgraph

of

which spans

(and possibly other vertices). The objective is to minimize the total length

. Since we only care about the cost of the union of the

‘s, we may assume without loss of generality that

. There is no restriction on computation time of the algorithm.

Remark. The ‘s are not actually Steiner trees because we did not insist that they are acyclic. If trees are desired, one could remove cycles from each

arbitrarily. This is equivalent to the problem that we stated above.

If we knew the terminal set in advance then the problem is trivial. The algorithm could compute in exponential time the minimum-length Steiner tree

spanning

, then set

in every time step. Unfortunately the algorithm does not know

in advance. Instead, our goal will be for the algorithm to behave almost as well as if it did know

. Formally, define competitive ratio of the algorithm to be the ratio

We want our algorithm to have small competitive ratio.

Theorem 1 There is a randomized, polynomial time algorithm with expected competitive ratio

.

I think this is optimal but I could not find a definitive reference. For very similar problems, Alon & Azar prove a lower bound and Imase & Waxman prove a

lower bound.

1.1. The Algorithm

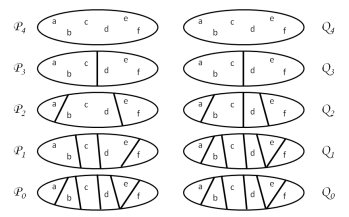

The main idea is to use algorithm of the last lecture to approximate the metric by a tree

with edge lengths

. Let

be the corresponding distance function on

. Recall that the leaves of

are identified with the vertices in

. The algorithm will then build a sequence of Steiner trees

that are subtrees of

, where each

spans

. This is trivial: since

is itself a tree, there is really only one reasonable choice for what

should be. We set

to be the unique minimal subtree of

that spans

.

Remark. This step of the algorithm illustrates the usefulness of probabilistically approximating the metric by a tree. Many problems can be solved either trivially or by very simple algorithms, when the underlying graph is a tree.

Clearly . We would like to understand how the length of our final Steiner tree

compares to the optimal Steiner tree

.

Unfortunately the tree itself isn’t a solution to our problem. Recall that

is not a subtree of

: the construction of

required adding extra vertices and edges. So

is not a subtree of

either. To obtain our actual solution, we will see below how to use the trees

to guide our construction of the desired subgraphs

of

.

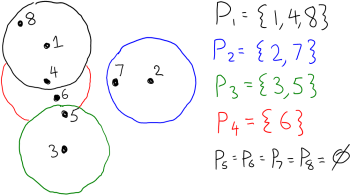

Proof: (of Claim 2). Let be an ordering of the terminals given by a depth-first traversal of

. Equivalently, let

denote the graph obtained from

by replacing every edge with two parallel copies. Perform an Euler tour of

, and let

be the order in which the terminals are visited.

The Euler tour traverses every edge of exactly once, so

is exactly half the length of the Euler tour. Thus

Now consider performing a walk through , visiting the terminals in the order given by

. Since this walk visits every leaf of

, it is a traversal of the tree, and hence it crosses every edge at least once. (In fact, it is an Eulerian walk, so it crosses every edge at least twice.)

Remark. The analysis in (3) illustrates why our random tree is so useful. The quantity that we’re trying to analyze (namely

) is bounded by a linear function of some distances in the tree (namely

). Because we can bound

by

, and because of linearity of expectation, we obtain a bound on

involving distances in

.

Now let us explain how the algorithm actually solves the online Steiner tree problem. It will maintain a sequence of subgraphs of

such that each

spans

. Initially

. Then at every subsequent time step

, we do the following.

- Let

be the closest vertex to

amongst

, using distances in

. In other words,

.

- Let

be a shortest path in

from

to

.

- Let

.

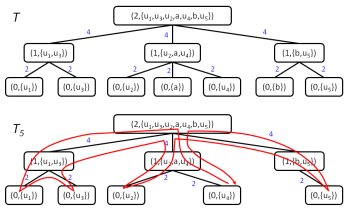

The trees described above can also be viewed in this iterative fashion. Initially

. Then at every subsequent time step

, we do the following.

- Let

be the unique path in

from

to the closest vertex in

.

- Let

.

The following important claim relates and

.

Proof: Let be the closest vertex in

to

, so

is a

–

path. The vertex

would only be added to

if some leaf

beneath

belongs to

. By the choice of weights in

, the weight of the

–

path is no longer than the weight of the

–

path. Thus

for all

. Consequently

as required.

Consequently,

since is the union of the

paths and

is the disjoint union of the

paths. So

by Claim 2. This proves that the expected competitive ratio is .